TL;DR

- GLM 4.7 delivers better ROI for most SaaS use cases due to significantly lower input and output costs, predictable reasoning behavior, and multi-provider flexibility.

- GPT 5.2 excels in complex, high-stakes tasks, such as advanced coding, deep reasoning, and long-context analysis, but its premium pricing can erode margins at scale.

- Output-heavy SaaS workloads amplify cost differences, making GPT 5.2 far more expensive than GLM 4.7 as usage grows.

- Median latency, not peak benchmarks, drives user experience, and GLM 4.7’s consistent response speed improves retention and conversion in real products.

- The smartest SaaS teams use a hybrid approach, reserving GPT 5.2 for high-risk workflows while running high-volume automation on GLM 4.7 to protect unit economics.

Introduction

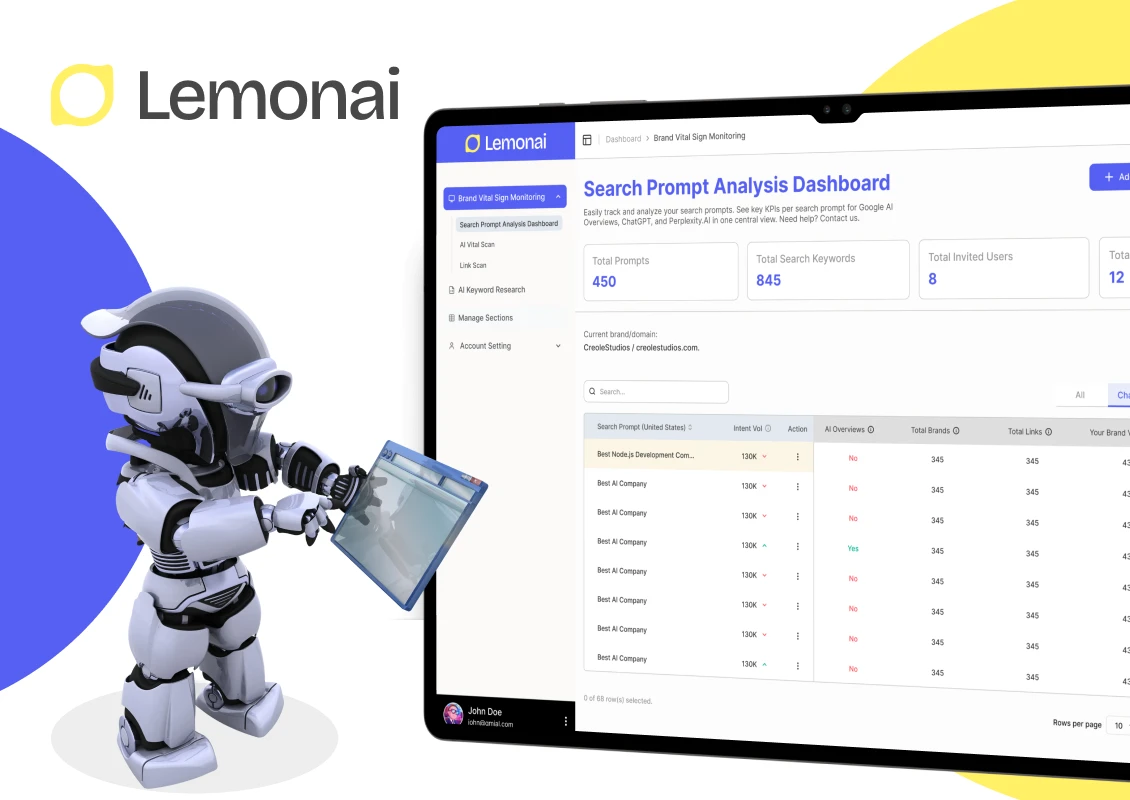

For SaaS teams, choosing an AI model is no longer a side experiment or a developer preference. It is a strategic and financial decision that directly affects margins, scalability, and long-term product viability. In practice, many teams discover this only after launch, when AI usage grows and costs surface in ways benchmarks never predicted. This is often the point where guidance from a generative AI development company becomes valuable, helping teams evaluate models not just on capability, but on real production factors like cost efficiency, latency, reliability, and operational overhead.

Benchmarks may look impressive on paper, but they rarely explain why AI costs quietly balloon after launch or why certain AI features fail to justify their ongoing spend. What matters in real SaaS environments is how consistently a model performs under load, how predictable the costs remain as usage scales, and how much engineering effort is required to keep AI workflows stable over time.

This comparison of GLM 4.7 vs GPT 5.2 focuses on the one question SaaS builders actually care about: Which model delivers better return on investment in real, production-grade SaaS environments?

GLM 4.7 Overview: ROI-First AI for SaaS Builders

GLM 4.7 is a reasoning-focused large language model built for cost-efficient execution at scale rather than headline benchmark dominance. Its lower inference costs, predictable multi-step behavior, and open, multi-provider availability make it well suited for SaaS products where AI features are used frequently and margins must remain intact.

Key Characteristics of GLM 4.7

- Reasoning-based execution that handles multi-step tasks more predictably

- Significantly lower input and output token costs

- Open licensing, reducing long-term vendor dependency

- Availability across multiple providers

Why SaaS Builders Care About GLM 4.7

For teams building AI-powered features that users interact with daily, predictability matters more than theoretical capability. GLM 4.7 offers:

- Lower and more predictable AI cost per user

- Better suitability for output-heavy workloads like chat, summaries, and agents

- Reduced engineering overhead from fewer retries and simpler orchestration

- Healthier gross margins as usage scales

When GLM 4.7 Makes Economic Sense

GLM 4.7 is a strong fit when:

- AI usage is frequent and high-volume

- Margins matter more than peak intelligence

- AI supports repeatable workflows like support, onboarding, or internal automation

- You want flexibility in hosting and provider choice

For founders worried about AI becoming a margin sink, GLM 4.7 is often the safer default.

GPT 5.2 Overview: Premium Intelligence for High-Stakes SaaS Use Cases

GPT 5.2 is a frontier-grade language model optimized for maximum reasoning depth and long-context performance. It is designed for high-stakes, complex workloads where accuracy and capability outweigh cost considerations, making it best suited for premium SaaS features, advanced analytics, and tasks where failure carries significant business risk.

Key Characteristics of GPT 5.2

- Strong performance across advanced math, science, and coding benchmarks

- Large context window for complex document and research tasks

- Adaptive execution optimized for difficult reasoning

- Proprietary, single-provider ecosystem

Why SaaS Teams Still Choose GPT 5.2

Despite higher costs, GPT 5.2 can deliver ROI when the cost of failure is higher than the cost of inference. It provides:

- Higher accuracy for complex reasoning tasks

- Better results in advanced coding and analytical workflows

- Greater confidence in high-risk or compliance-sensitive outputs

When GPT 5.2 Justifies Its Cost

GPT 5.2 makes sense when:

- AI output directly affects revenue, compliance, or legal risk

- Features are sold as premium or enterprise tiers

- Tasks involve long-context reasoning that cannot be simplified with RAG

- Accuracy matters more than throughput

For most SaaS teams, GPT 5.2 should be a scalpel, not a hammer.

What ROI Really Means for AI-Powered SaaS

In AI-powered SaaS products, ROI is not a single metric. It is the combined effect of cost efficiency, user experience, reliability, and long-term operational effort. Evaluating AI models through this lens helps teams avoid decisions that look strong in demos but weaken the business over time.

ROI in AI-powered SaaS can be broken down into four practical levers.

Cost per successful task

The real cost of AI is not the price per token, but the cost to complete a task end-to-end without human intervention. Output-heavy pricing models magnify this difference quickly, especially in chat, summarization, and agent-based workflows where responses generate far more tokens than inputs.
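One way to make this concrete is to divide the cost of a single attempt by the share of attempts that succeed without human help. The sketch below does that under simple assumptions: the token counts, prices, and success rate are illustrative placeholders rather than measurements of either model, and every attempt is assumed to succeed independently.

```python
def cost_per_attempt(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Dollar cost of one model call, given per-1M-token prices."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def cost_per_successful_task(attempt_cost, success_rate):
    """Expected spend per completed task when failed attempts are retried.

    Assumes each attempt succeeds independently with probability
    `success_rate`, so the expected number of attempts is 1 / success_rate.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return attempt_cost / success_rate


# Hypothetical workload: 1,000 input tokens and 2,000 output tokens per attempt,
# priced at $0.50 / $2.00 per 1M tokens, with 80% of attempts succeeding.
attempt = cost_per_attempt(1_000, 2_000, 0.50, 2.00)
print(f"per attempt:         ${attempt:.6f}")
print(f"per successful task: ${cost_per_successful_task(attempt, 0.80):.6f}")
```

The useful part is the second function: a cheaper model with a lower success rate can still cost more per finished task, so both numbers need to come from your own production data.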

Speed and user experience

Latency directly influences activation, retention, and user trust. Slow or inconsistent AI responses feel broken to users, even when the output is technically correct. In real SaaS products, faster responses often deliver higher perceived value than marginal gains in accuracy.

Quality and reliability

Higher task success rates reduce retries, clarifications, and escalations to human teams. Each failed or partially correct response increases both token consumption and support overhead, quietly eroding ROI at scale.

Engineering and vendor overhead

Complex orchestration, frequent prompt tuning, monitoring systems, and vendor lock-in introduce long-term costs that rarely appear in benchmark charts. These hidden expenses accumulate over time and can outweigh the benefits of higher-performing models if not carefully managed.

Why ROI Is the Right Comparison Metric for SaaS Teams

Benchmarks measure what an AI model can do in controlled test environments. ROI measures what your business can sustainably run in production. For SaaS teams, this distinction is critical because AI performance does not exist in isolation. It compounds across users, workflows, and months of usage.

When evaluating AI models, SaaS teams must account for more than raw accuracy scores. Real ROI is shaped by:

- Retry rates and failed responses, which increase token usage and human intervention

- Engineering time spent on prompt tuning, fallbacks, and monitoring, which becomes an ongoing operational cost

- Latency-driven user drop-off, especially in chat, agent, and onboarding flows where responsiveness impacts activation and retention

- Cost growth as usage scales, where small per-request differences turn into significant margin erosion at higher volumes

In practice, an AI model that performs marginally better on benchmarks but costs several times more per successful task can quietly undermine unit economics. Over time, this leads to AI features that look impressive but are difficult to justify financially, especially as a SaaS product grows.

Pricing Comparison: What Happens to Your Margins at Scale

Across the provider prices listed below, the pricing gap between GLM 4.7 and GPT 5.2 is not marginal. It is structural, and it becomes more pronounced as SaaS usage grows.

GLM 4.7 pricing (depending on provider):

- Input tokens: approximately $0.40 to $0.60 per 1M tokens

- Output tokens: approximately $1.50 to $2.20 per 1M tokens

GPT 5.2 pricing:

- Input tokens: $1.75 per 1M tokens

- Output tokens: $14.00 per 1M tokens

For most AI-powered SaaS products, output tokens account for the majority of usage. Chat responses, summaries, agent actions, and generated content all produce far more output than input. In these output-heavy workloads, GPT 5.2’s pricing multiplies costs rapidly compared to GLM 4.7.

To put this into perspective, even a modest difference in per-request output cost compounds significantly when:

- AI features are used daily by active users

- Agents execute multiple steps per interaction

- Usage grows across teams, accounts, or regions

For early-stage founders, this pricing gap directly affects burn rate and runway.

For growth-stage SaaS teams, it impacts gross margin and pricing flexibility.

For post-PMF companies, it influences long-term unit economics and valuation, especially when AI becomes a core product capability.

In practice, a model that is roughly six to nine times more expensive on output tokens, as GPT 5.2 is at the prices above, can quietly turn a promising AI feature into a long-term margin liability if not used selectively.
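To see how the per-token prices above turn into a monthly bill, here is a rough sketch. The prices are the ones quoted in this section; the workload (user count, requests per day, tokens per request) is an assumption chosen only for illustration, so substitute your own numbers.

```python
# Per-1M-token prices quoted in this article (USD).
PRICES = {
    "GLM 4.7 (low)": {"input": 0.40, "output": 1.50},
    "GLM 4.7 (high)": {"input": 0.60, "output": 2.20},
    "GPT 5.2": {"input": 1.75, "output": 14.00},
}


def monthly_cost(price, requests_per_month, input_tokens, output_tokens):
    """Monthly spend in dollars for a fixed per-request token profile."""
    per_request = (
        input_tokens * price["input"] + output_tokens * price["output"]
    ) / 1_000_000
    return per_request * requests_per_month


# Assumed workload (illustrative only): 5,000 active users, 10 requests a day,
# 30 days, 500 input tokens and 1,500 output tokens per request.
requests = 5_000 * 10 * 30
for name, price in PRICES.items():
    cost = monthly_cost(price, requests, 500, 1_500)
    print(f"{name:15s} ${cost:,.2f} per month")
```

Under these assumptions the sketch puts GLM 4.7 at roughly $3,675 to $5,400 per month and GPT 5.2 at roughly $32,800, with output tokens accounting for most of the difference.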

Speed, Latency, and UX: The Revenue Impact Most Teams Miss

Median latency matters more than peak performance. In real products:

- Users abandon slow chat responses

- Multi-step agents amplify delays

- Slower AI feels unreliable, even when correct

GLM 4.7 often delivers a better user experience for exactly this reason. In SaaS products like support chat or onboarding flows, its faster and more predictable median latency makes AI feel responsive and reliable, which directly improves user trust, retention, and conversion.
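If you want to check this against your own traffic, a minimal sketch like the one below summarizes logged response times as a median and a 95th percentile. The sample latencies are invented for illustration and do not describe either model.

```python
import statistics


def latency_summary(samples_ms):
    """Return median and 95th-percentile latency from raw samples (milliseconds)."""
    ordered = sorted(samples_ms)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(ordered, n=20, method="inclusive")[-1]
    return {"median_ms": statistics.median(ordered), "p95_ms": p95}


# Made-up request latencies: mostly steady, with one slow outlier.
samples = [420, 450, 470, 480, 500, 510, 530, 560, 600, 2400]
summary = latency_summary(samples)
print(summary)
```

Tracking both values per model and per workflow shows whether users typically get a fast answer (median) and how bad the slow cases are (95th percentile).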

Intelligence and Reliability: Avoiding Costly Failures

GPT 5.2 leads in several advanced benchmarks, especially in complex reasoning and coding tasks. This matters when:

- A wrong answer has high business cost

- Outputs are used directly without human review

GLM 4.7, however, delivers more capability per dollar once cost is considered. For many SaaS workflows, especially structured automation, intelligence-per-dollar matters more than absolute intelligence.

The key question is not “Which model is smarter?” but “How smart does this workflow actually need to be?”

Reasoning vs Non-Reasoning Models: Engineering Cost Implications

GLM 4.7 often outperforms GPT 5.2 on engineering efficiency because its reasoning-based execution handles multi-step workflows more reliably without heavy prompt engineering. For example, in a support automation flow where the AI must read a ticket, identify intent, fetch relevant data, and generate a response, GLM 4.7 is more likely to complete the steps correctly in one pass. GPT 5.2 may require additional prompt instructions, retry logic, or guardrails to avoid partial or inconsistent outputs. Over time, this extra scaffolding increases maintenance and engineering effort, whereas GLM 4.7’s stability reduces ongoing operational cost and improves ROI for lean SaaS teams.

Context Window: Paying for Capacity You May Not Monetize

One of the most visible differences between GPT 5.2 and GLM 4.7 is context window size. GPT 5.2 supports input contexts of up to 400,000 tokens, while GLM 4.7 supports approximately 200,000 tokens. On paper, this appears to be a decisive advantage.

This additional capacity is valuable for specific use cases such as full contract analysis, large research reports, or technical documentation that spans hundreds of pages. For SaaS products operating in legal, research, or compliance-heavy domains, the ability to process extremely long inputs in a single pass can directly improve user experience and justify the cost.

However, for most AI-powered SaaS products, real-world inputs are far smaller. Customer support tickets, onboarding conversations, sales chats, and workflow automations typically operate well below 10,000 to 20,000 tokens per interaction. In these scenarios, a 200,000-token context window already provides significant headroom.

From an ROI standpoint, choosing a model for its 400,000-token context window when your product consistently uses less than 5 percent of that capacity quietly erodes margins. Under per-token pricing you are not billed for unused context, but you still pay the premium model’s higher rate on every token you do send and receive. Well-designed retrieval pipelines and chunking strategies can satisfy most context requirements at a fraction of the cost, without overpaying for theoretical capacity that does not translate into measurable business value.
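The utilization figure and the chunking idea can both be sketched in a few lines. This is a simplified illustration, not a production retrieval pipeline: words stand in for tokens, and the chunk size and overlap are arbitrary assumptions.

```python
def context_utilization(tokens_used, context_window):
    """Fraction of the context window a request actually occupies."""
    return tokens_used / context_window


def chunk_words(text, chunk_size=200, overlap=20):
    """Split text into overlapping word chunks for a retrieval index.

    Words stand in for tokens here; a real pipeline would count tokens
    with the model's own tokenizer.
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    # Stop early enough that the final chunk is never pure overlap.
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + chunk_size]) for i in range(0, last_start, step)]


# A 20,000-token interaction against the two context sizes discussed above.
print(f"{context_utilization(20_000, 400_000):.0%} of a 400,000-token window")
print(f"{context_utilization(20_000, 200_000):.0%} of a 200,000-token window")

# Chunk a synthetic 450-word document.
document = " ".join(f"w{i}" for i in range(450))
chunks = chunk_words(document)
print(len(chunks), "chunks")
```

With retrieval, only the few chunks relevant to a question are sent to the model, which is how long documents can be handled without relying on a very large context window.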

Provider Availability and Operational Risk

While GPT 5.2 has a significantly larger user base, it is available only through OpenAI’s own infrastructure and APIs, so all usage is tied to a single provider for pricing, regions, and reliability. GLM 4.7, in contrast, can be accessed through multiple third-party inference providers, allowing teams to choose where the model runs based on cost, latency, or regional availability. For SaaS teams, this difference is not about popularity, but about flexibility and risk. Multiple providers reduce dependency on a single vendor and give more control over operational costs and uptime as AI usage scales.

Multi-provider access improves:

- Resilience and uptime

- Regional latency optimization

- Cost negotiation

Operational reliability directly impacts customer trust, especially for AI-first products.
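As a rough illustration of what multi-provider resilience can look like in code, the sketch below tries a list of providers in order and falls back when one fails. The provider names and functions are hypothetical stand-ins; real code would wrap each provider's own client and add timeouts and logging.

```python
def call_with_failover(providers, prompt):
    """Try each provider in order and return the first successful response.

    `providers` is a list of (name, callable) pairs. Each callable takes a
    prompt and returns text, or raises an exception on failure.
    """
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Stand-in functions for illustration only.
def flaky_provider(prompt):
    raise TimeoutError("simulated timeout")


def healthy_provider(prompt):
    return f"echo: {prompt}"


used, reply = call_with_failover(
    [("provider-a", flaky_provider), ("provider-b", healthy_provider)],
    "Summarize this ticket",
)
print(used, "->", reply)
```

The same ordering logic can be driven by cost or regional latency instead of a fixed list, which is where provider choice starts to affect unit economics as well as uptime.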

ROI Recommendation Matrix for SaaS Builders

GLM 4.7 is usually the better ROI choice for:

- Customer support and helpdesk agents

- Sales and onboarding assistants

- Workflow automation and background agents

- Cost-sensitive or AI-heavy SaaS products

GPT 5.2 is better suited for:

- Advanced coding and developer tools

- High-risk reasoning tasks

- Long-context research features

- Premium enterprise offerings

Most successful teams use both strategically.

How SaaS Teams Should Roll Out AI Without Killing ROI

- Start with a hybrid model strategy

- Track cost per successful outcome, not per token

- Measure retries, escalations, and latency

- Reserve premium models for high-stakes tasks

- Re-evaluate model fit as pricing and performance evolve

AI should be treated as a variable cost center, not a fixed bet.
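A hybrid strategy can start as a very small routing rule. The sketch below is one possible shape, with assumptions stated up front: the model identifiers are placeholders rather than real API model names, the `Task` fields are invented for this example, and the 200,000-token threshold simply reuses the GLM 4.7 context size mentioned earlier.

```python
from dataclasses import dataclass

# Placeholder identifiers, not real API model names.
CHEAP_MODEL = "glm-4.7"
PREMIUM_MODEL = "gpt-5.2"


@dataclass
class Task:
    kind: str                  # e.g. "support_reply", "contract_review"
    high_stakes: bool = False  # revenue, compliance, or legal impact
    input_tokens: int = 0


def choose_model(task, long_context_threshold=200_000):
    """Send high-stakes or very long-context tasks to the premium model."""
    if task.high_stakes or task.input_tokens > long_context_threshold:
        return PREMIUM_MODEL
    return CHEAP_MODEL


tasks = [
    Task("support_reply", input_tokens=1_200),
    Task("contract_review", high_stakes=True, input_tokens=90_000),
    Task("research_digest", input_tokens=320_000),
]
for task in tasks:
    print(f"{task.kind:16s} -> {choose_model(task)}")
```

Keeping the rule in one function makes it easy to revisit as pricing and performance change, and to log which model handled each task when you measure cost per successful outcome.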

Final Verdict for SaaS Builders

There is no single best AI model. The right choice depends on how your SaaS product scales, where accuracy truly matters, and how much cost your margins can absorb. GLM 4.7 often delivers better ROI for high-volume, cost-sensitive workflows, while GPT 5.2 is better reserved for complex, high-stakes tasks where premium performance justifies the spend.

For SaaS teams, the real value lies in aligning AI model decisions with unit economics and long-term scalability. Working with a generative AI development company can help ensure these decisions are grounded in real production metrics rather than benchmarks alone.

If you would like expert guidance on choosing and deploying the right AI models for your product, book a free 30-minute consultation to review your use cases, costs, and ROI risks before you scale.
