TL;DR:

- @react-native-voice/voice often fails on older Android versions due to native API and permission issues.

- Replacing it with react-native-audio-recorder-player ensures consistent mic access and better control over audio recording.

- Recorded audio can be transcribed using Whisper, Google Cloud, or any STT service for accurate results.

- The approach supports Android 6.0+, can run offline when paired with a locally hosted Whisper model, and removes the dependency on flaky native speech services.

- For production-ready implementation, collaborate with expert React Native developers to optimize performance and handle backend integration.

Introduction

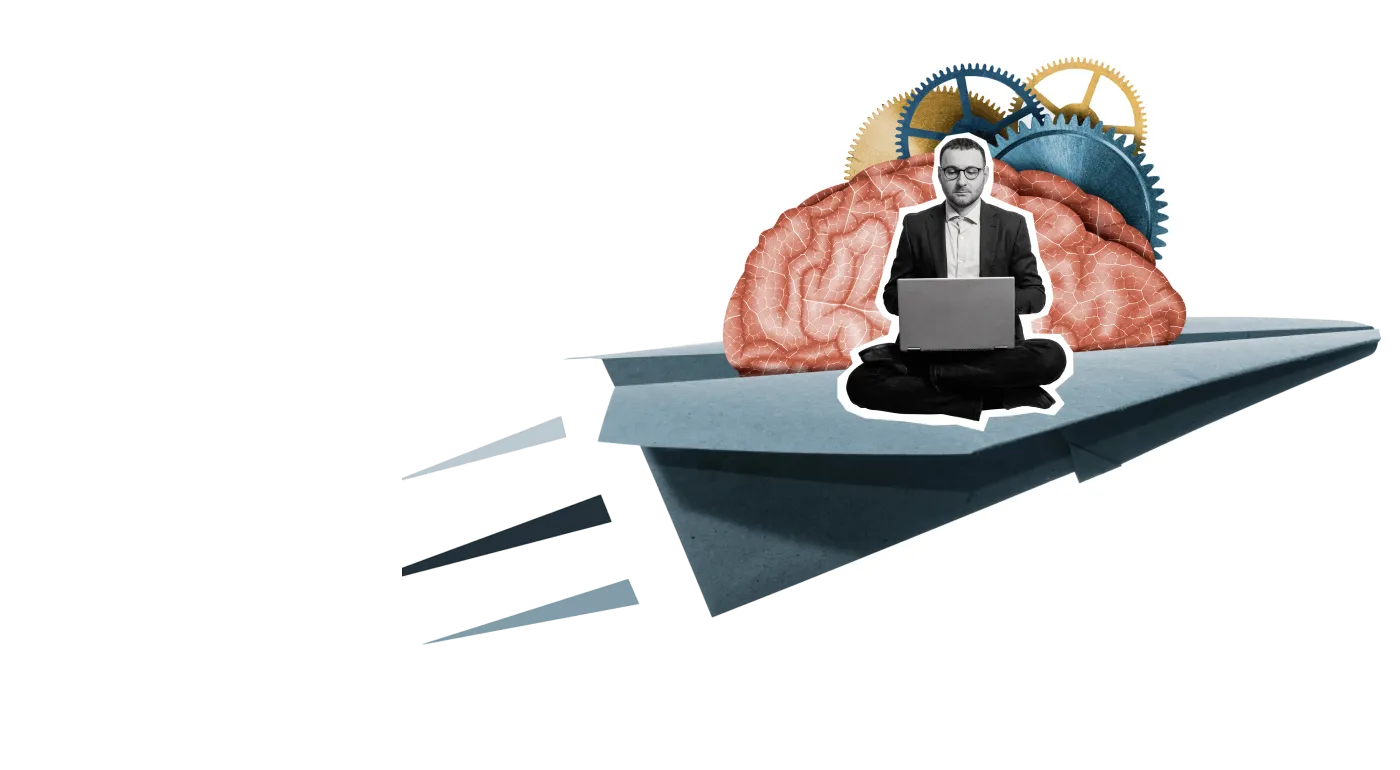

Voice input is becoming a core feature in modern mobile apps, enabling hands-free commands, searches, and accessibility options that enhance user experience. React Native developers typically rely on the @react-native-voice/voice library for speech-to-text capabilities—but it often struggles on older Android versions (like 8.0 and 8.1), where the microphone may not activate, or speech results fail to return.

If you’ve faced such frustrations, you’re not alone. A more stable workaround is to replace @react-native-voice/voice with react-native-audio-recorder-player, which records raw audio directly from the microphone. You can then send that audio to a transcription service like Whisper or Google Cloud for accurate speech recognition. This approach ensures better compatibility, flexibility, and control across devices.

For teams building robust, voice-enabled applications, partnering with a React Native app development company experienced in optimizing native modules can help implement this solution efficiently—ensuring smooth performance, cross-platform reliability, and future-ready voice functionality.


Why @react-native-voice/voice Fails on Older Android Versions

The @react-native-voice/voice library wraps native Android and iOS speech services. On older Android versions, especially 8.1 and below, several issues arise:

- The microphone doesn’t activate properly.

- onSpeechResults or onSpeechEnd callbacks never trigger.

- The app crashes due to permission inconsistencies.

- Internal Google Speech Service may not be available.

As a result, many developers experience inconsistent behavior across devices, making the app unreliable.

Meet react-native-audio-recorder-player

This lightweight library handles audio recording and playback—but lets you handle the transcription your way.

Key Benefits:

- Works well on older Android versions

- Saves audio to a file you control (the recording code in this article captures 16 kHz mono WAV, which suits transcription)

- Full control of recording workflow

- Zero dependency on platform speech APIs

Project Setup

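Install the library, plus react-native-audio-record, which the recording snippet in this article imports:

    npm install react-native-audio-recorder-player react-native-audio-record
    npx pod-install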

Add these to your AndroidManifest.xml:

    <uses-permission android:name="android.permission.RECORD_AUDIO" />
    <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
    <uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />

Recording Audio from the Mic
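Before any recording call, note that declaring RECORD_AUDIO in the manifest is not sufficient on Android 6.0 and later: the microphone permission must also be granted at runtime. The following is a minimal sketch rather than code from either library. `ensureMicPermission`, `PermissionRequester`, and `MicAccess` are hypothetical names introduced here, and the requester is injected so the logic can be exercised off-device. In an app, React Native's `PermissionsAndroid` is assumed to fit that shape.

```typescript
// Minimal shape of the permission API this sketch depends on (hypothetical
// interface; React Native's PermissionsAndroid.request is assumed to fit it).
interface PermissionRequester {
  request(permission: string): Promise<string>;
}

const RECORD_AUDIO = 'android.permission.RECORD_AUDIO';

type MicAccess =
  | { ok: true }
  | { ok: false; reason: 'denied' | 'blocked' | 'error' };

// Ask for microphone access and normalise the outcome so the caller can
// decide whether to start recording, explain why access is needed, or
// send the user to the system settings.
async function ensureMicPermission(
  requester: PermissionRequester,
): Promise<MicAccess> {
  try {
    const result = await requester.request(RECORD_AUDIO);
    if (result === 'granted') return { ok: true };
    // 'never_ask_again' means the system will not show the dialog again.
    if (result === 'never_ask_again') return { ok: false, reason: 'blocked' };
    return { ok: false, reason: 'denied' };
  } catch {
    // Treat an unexpected failure of the permission call as "no access".
    return { ok: false, reason: 'error' };
  }
}
```

In a component this could be called as `await ensureMicPermission(PermissionsAndroid)` before starting the recorder, with recording skipped whenever `ok` is false.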

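    // Note: init/start/stop below is the API of the react-native-audio-record
    // package, which is separate from react-native-audio-recorder-player.
    import AudioRecord from 'react-native-audio-record';

    // Configure the recorder once, before the first start() call.
    const initAudioRecord = () => {
      AudioRecord.init({
        sampleRate: 16000, // 16 kHz
        channels: 1, // mono
        bitsPerSample: 16, // 16-bit PCM
        wavFile: 'user_audio.wav', // output file name
      });
    };

    const startRecording = () => AudioRecord.start();

    // stop() resolves with the path of the recorded WAV file.
    const stopRecording = async () => {
      const audioFile = await AudioRecord.stop();
      console.log('Recorded file path:', audioFile);
      // Upload this WAV file to the backend
      return audioFile;
    };

Uploading & Transcribing Audio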

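    // Upload the recorded WAV file and return the parsed JSON response.
    const uploadAudio = async (filePath: string) => {
      const formData = new FormData();
      // React Native's FormData accepts a { uri, name, type } file descriptor.
      formData.append('file', {
        uri: `file://${filePath}`,
        name: 'user_audio.wav',
        type: 'audio/wav',
      });

      const response = await fetch('https://your-backend.com/transcribe', {
        method: 'POST',
        body: formData,
      });
      if (!response.ok) {
        throw new Error(`Transcription request failed: ${response.status}`);
      }

      const result = await response.json();
      console.log('Transcript:', result.text);
      return result; // the caller reads result.text
    };

Displaying Transcript in Real Time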
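Because the transcript only arrives after recording stops and the upload completes, the screen passes through several states before any text appears. One way to model that is a small reducer. This is a sketch under assumptions: `VoiceState`, `VoiceEvent`, and `voiceReducer` are hypothetical names that come from neither library.

```typescript
// Every state the voice screen can be in.
type VoiceState =
  | { status: 'idle' }
  | { status: 'recording' }
  | { status: 'transcribing' }
  | { status: 'done'; transcript: string }
  | { status: 'error'; message: string };

// Events raised by the UI and by the upload call.
type VoiceEvent =
  | { type: 'START' }
  | { type: 'STOP' }
  | { type: 'TRANSCRIPT'; text: string }
  | { type: 'FAIL'; message: string };

function voiceReducer(state: VoiceState, event: VoiceEvent): VoiceState {
  switch (event.type) {
    case 'START':
      // Ignore a second tap while a recording or upload is in flight.
      return state.status === 'recording' || state.status === 'transcribing'
        ? state
        : { status: 'recording' };
    case 'STOP':
      // Stopping only makes sense while recording.
      return state.status === 'recording' ? { status: 'transcribing' } : state;
    case 'TRANSCRIPT':
      // Drop late responses that arrive after the user moved on.
      return state.status === 'transcribing'
        ? { status: 'done', transcript: event.text }
        : state;
    case 'FAIL':
      return { status: 'error', message: event.message };
    default:
      return state;
  }
}
```

In a component this could be wired up with React's `useReducer(voiceReducer, { status: 'idle' })`, rendering a spinner while the status is `transcribing` and the text once it is `done`.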

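    import React, { useState } from 'react';
    import { Text } from 'react-native';

    // Hooks must be called inside a component, so the state lives here.
    const TranscriptView = () => {
      const [transcript, setTranscript] = useState('');

      // Call this from your stop button: it stops recording, uploads the
      // file, and stores the returned text.
      const handleTranscription = async () => {
        const path = await AudioRecord.stop();
        const result = await uploadAudio(path);
        setTranscript(result.text);
      };

      return <Text>{transcript}</Text>;
    };

Benefits over @react-native-voice/voice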

- Works on Android 6.0+

- Backend-agnostic (Whisper, Google, etc.)

- Full customization of audio flow

- Can work offline when a Whisper model runs locally

Limitations to Keep in Mind

- Doesn’t support live streaming (yet)

- Needs a transcription backend

- Slight delay in response

- More implementation work (but worth it!)


Conclusion

If you’re building apps for a diverse user base—including those using older Android devices—relying solely on native speech APIs can lead to inconsistent experiences. Switching to react-native-audio-recorder-player offers a more reliable and customizable approach by letting you record raw audio and process it with any speech-to-text engine, from Whisper to Google Cloud.

This method gives you full control over the voice workflow, ensures better cross-version support, and future-proofs your app against breaking API changes.

To implement this seamlessly and scale your voice-enabled features, consider working with experienced React Native developers who can help you integrate, optimize, and maintain your custom voice solutions with best practices in performance, permissions, and UX.

Frequently Asked Questions (FAQ)

Q1: Why not just fix @react-native-voice/voice instead of replacing it?

A: The issues are deeply rooted in native Android APIs, especially on older versions. Fixing them often requires extensive custom native code or doesn’t work at all on some devices.

Q2: Does react-native-audio-recorder-player work on iOS as well?

A: Yes, it supports both iOS and Android. However, you’ll still need a transcription backend (like Whisper or Google Cloud).

Q3: Can I use this setup for real-time voice recognition?

A: Not directly. This approach works best for short utterances. For real-time streaming, consider integrating a WebSocket backend or native STT engine.

Q4: Is Whisper API free?

A: OpenAI Whisper API is paid, but the local Whisper model is open-source and can run on-device with proper setup.

Q5: What audio format is best for transcription services?

A: WAV (16-bit PCM, mono, 16 kHz) is recommended. It is widely accepted by STT engines such as Whisper and Google.
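To make the recommended format concrete, here is a hedged sketch that reads the fields of a WAV header and checks them against those settings. It assumes the canonical 44-byte PCM header layout (RIFF, fmt, then data), which not every recorder produces. `parseWavHeader`, `isRecommendedFormat`, and `WavInfo` are hypothetical names introduced for illustration.

```typescript
interface WavInfo {
  audioFormat: number; // 1 means uncompressed PCM
  channels: number;
  sampleRate: number;
  bitsPerSample: number;
  dataBytes: number; // size of the audio payload declared in the header
  durationSeconds: number;
}

// Read a four-character chunk tag such as "RIFF" or "data".
function readTag(view: DataView, offset: number): string {
  return String.fromCharCode(
    view.getUint8(offset),
    view.getUint8(offset + 1),
    view.getUint8(offset + 2),
    view.getUint8(offset + 3),
  );
}

// Parse a canonical 44-byte WAV header; returns null for any other layout.
function parseWavHeader(bytes: Uint8Array): WavInfo | null {
  if (bytes.byteLength < 44) return null;
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const tagsOk =
    readTag(view, 0) === 'RIFF' &&
    readTag(view, 8) === 'WAVE' &&
    readTag(view, 12) === 'fmt ' &&
    readTag(view, 36) === 'data';
  if (!tagsOk) return null;

  // All multi-byte fields in a RIFF file are little-endian.
  const audioFormat = view.getUint16(20, true);
  const channels = view.getUint16(22, true);
  const sampleRate = view.getUint32(24, true);
  const bitsPerSample = view.getUint16(34, true);
  const dataBytes = view.getUint32(40, true);

  const bytesPerSecond = sampleRate * channels * (bitsPerSample / 8);
  const durationSeconds = bytesPerSecond > 0 ? dataBytes / bytesPerSecond : 0;
  return { audioFormat, channels, sampleRate, bitsPerSample, dataBytes, durationSeconds };
}

// True when the file matches 16-bit PCM, mono, 16 kHz.
function isRecommendedFormat(info: WavInfo): boolean {
  return (
    info.audioFormat === 1 &&
    info.channels === 1 &&
    info.sampleRate === 16000 &&
    info.bitsPerSample === 16
  );
}
```

At these settings one second of audio is 16000 × 2 bytes = 32,000 bytes, so a duration near zero is a cheap signal that the microphone never captured anything and the upload can be skipped.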

Q6: How can I detect when the user stops speaking?

A: You can use a silence detection library, or monitor audio levels during recording and stop automatically after a pause.
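The level-monitoring option can be sketched as follows. This is an illustration under assumptions, not a library API: `frameRms` and `createSilenceDetector` are hypothetical names, the threshold and timing values are placeholders to tune per device, and the code expects frames of 16-bit PCM samples that your recorder would have to supply (how those frames are obtained and decoded is left out).

```typescript
// Root-mean-square level of one frame of 16-bit PCM samples, scaled to 0..1.
function frameRms(samples: Int16Array): number {
  if (samples.length === 0) return 0;
  let sumSquares = 0;
  samples.forEach((value) => {
    const normalised = value / 32768;
    sumSquares += normalised * normalised;
  });
  return Math.sqrt(sumSquares / samples.length);
}

interface SilenceDetectorOptions {
  threshold: number; // RMS below this counts as quiet (assumed value)
  frameMs: number; // duration of each frame passed to the detector
  silenceMs: number; // how long the quiet must last before stopping
}

// Returns a function to call with each frame. It returns true once speech
// has been heard and is then followed by enough continuous quiet.
function createSilenceDetector(
  options: SilenceDetectorOptions,
): (frame: Int16Array) => boolean {
  let heardSpeech = false;
  let quietMs = 0;

  return (frame: Int16Array): boolean => {
    if (frameRms(frame) >= options.threshold) {
      heardSpeech = true;
      quietMs = 0; // any loud frame resets the pause timer
      return false;
    }
    // Do not stop on the silence that precedes the first word.
    if (!heardSpeech) return false;
    quietMs += options.frameMs;
    return quietMs >= options.silenceMs;
  };
}
```

A caller would stop the recorder and start the upload the first time the detector returns true. Requiring speech before counting silence avoids ending the recording while the user is still getting ready to talk.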
